FAST™ and CCSS: Friends but not Relations

By: Ed O’Connor, Ph.D.

The Common Core State Standards (CCSS) were developed by the National Governors Association Center for Best Practices and the Council of Chief State School Officers and offered for possible adoption by U.S. states to address the lack of consistency in what is taught across schools in different states. Since the release of the CCSS in 2010, educators have been paying close attention to issues of alignment among curricula, assessments, and the CCSS. These alignment considerations have generally been grounded in a basic understanding: if students are expected to demonstrate the knowledge and skills articulated in these standards, we must be certain that the instruction delivered includes the appropriate content and will lead to student mastery of these learning outcomes.

While the CCSS were intended to bring coherence and consistency across states with regard to expected educational outcomes to be achieved by grade for students in U.S. public schools, the expected adoption of a common set of state standards by all states ultimately was not realized. Initially, two states (Alaska and Texas) opted not to adopt the standards. Later, several states dropped their adoption and chose instead to identify state standards specific to these individual states. Thus, what was intended to be an effort at alignment of standards across states for improving clarity and consistency of expected learning outcomes has resulted in a perceived ongoing inconsistency of expectations across states. At present, this situation has resulted in confusion among educators who are trying to understand the learning expectations for their grade or content area. This confusion is most pronounced for educators in states that have adopted their own standards.

In this context, educators have also been paying close attention to the content evaluated in various assessment tools and routines. The logic here has been that if the CCSS are the priority learning objectives, then all assessments should provide information specific to those learning objectives. While this logic is sound, it often leads to oversimplification and inaccurate assumptions about how we should evaluate assessment tools and routines. This blog article will discuss the key issues related to the alignment of assessments with the CCSS and will identify several key parameters to be considered when evaluating assessments for alignment to state standards, including the CCSS. Following these general guidelines will be a specific discussion of the alignment of the FastBridge assessment tools with state standards. It is hoped that this discussion will help educators better understand the issues surrounding the alignment of assessment with state standards.

Considering State Standards and Common Core

As of January 2017, 42 U.S. states and five territories, including the District of Columbia, have adopted the CCSS in both English Language Arts and Mathematics. Minnesota has adopted the CCSS in English Language Arts but has retained its own standards in Math. Alaska, Indiana, Nebraska, Oklahoma, South Carolina, Texas, and Virginia have adopted their own state standards (National Governors Association Center for Best Practices and Council of Chief State School Officers, 2017). Thus, all 50 states and the District of Columbia have established and articulated learning standards that are targeted at preparing students for “College and Career Readiness” by the end of grade 12.

A closer look at the standards in states that have adopted their own reveals that these “alternative standards” are more similar to the CCSS than one might expect. Overall, state-specific standards have generally been determined to be similarly clear and rigorous (“Too Close to Call”) as compared to CCSS or have been evaluated to be less clear and rigorous (“Clearly Inferior”) than CCSS. Only one state, Indiana, earned a rating of having “Clearly Superior” standards in English Language Arts. No states achieved a rating of “Clearly Superior” on Math standards (Fordham Institute Report, 2010). A review of state standards data reveals that, since the introduction of CCSS, state proficiency standards have uniformly and substantially increased in rigor and the differences among state standards have narrowed considerably, with the most dramatic narrowing occurring between 2013 and 2015 (Peterson, Barrow, & Gift, 2016).

These analyses of CCSS and individual state standards clearly demonstrate that learning standards have become substantially more consistent across states since the introduction of the CCSS, regardless of whether or not states have chosen to adopt the formal CCSS or create their own. Further, the evaluation by the Fordham Institute suggests that the CCSS are generally more clear and rigorous when compared to state-developed standards. Considering this, assessments aligned to CCSS would be expected to effectively predict achievement of state standards regardless of whether or not the state has formally adopted the CCSS. At worst, when predicting percentages of students meeting state proficiency standards, measures aligned with CCSS would be expected to underestimate performance on state tests. In this case the surprise factor would be a happy one, as more students than predicted would achieve proficiency on the state test.

Alignment of Assessments to Standards

When considering the alignment of assessment tools to state standards, it is important to consider two parameters: (a) alignment of test content to state standards, and (b) classification accuracy. In addition, it is important to consider whether the assessment purpose is formative, interim (benchmark), or summative. Finally, one must realize that the way one evaluates the alignment of assessments with standards differs from the way one evaluates the alignment of curriculum with standards.

Alignment of Test Content

As discussed earlier, a broad purpose of assessment is to be able to make decisions regarding whether or not a student has achieved the knowledge or skill articulated in the standard. This element is fairly straightforward and can be achieved so long as the assessment contains items that are legitimately representative of the knowledge or skill described. In addition, the assessment procedures and format would need to be demonstrably effective for capturing students’ actual capacity relative to the standard. Evaluation of assessments in this area usually involves developing a table that contains a column of descriptors of the standard with examples of the associated test items that are believed to reflect each standard element presented in a second column. Another strategy for demonstrating alignment is to categorize tests or subtests by standards they assess.
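The two-column alignment table described above can be sketched in a few lines of Python. This is a minimal illustration, not the FAST™ procedure: the item IDs and the item-to-standard assignments are hypothetical, the standard codes follow the CCSS numbering only for illustration, and the descriptors are paraphrased.

```python
# Standards to check (descriptors paraphrased for illustration).
STANDARDS = {
    "3.OA.A.1": "Interpret products of whole numbers",
    "3.OA.C.7": "Fluently multiply and divide within 100",
    "3.NBT.A.2": "Fluently add and subtract within 1000",
}

# Hypothetical test items, each tagged with the standard(s) it is believed to reflect.
ITEM_MAP = {
    "item_01": ["3.OA.C.7"],
    "item_02": ["3.OA.C.7", "3.OA.A.1"],
    "item_03": ["3.OA.A.1"],
}

def coverage(standards, item_map):
    """Build the alignment table: standard code -> list of items that assess it."""
    table = {code: [] for code in standards}
    for item, codes in sorted(item_map.items()):
        for code in codes:
            if code in table:
                table[code].append(item)
    return table

def uncovered(table):
    """Standards with no associated items, i.e. gaps in content alignment."""
    return [code for code, items in table.items() if not items]

table = coverage(STANDARDS, ITEM_MAP)
for code, items in table.items():
    print(f"{code:10} {STANDARDS[code]:42} {', '.join(items) or '(no items)'}")
print("Gaps:", uncovered(table))
```

A table like this shows at a glance which standards are represented by items and which are not; it says nothing yet about whether the items capture students' actual capacity, which is the second requirement noted above.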

Classification Accuracy

A second consideration has to do with the specific assessment question that is being investigated. In the domain of assessment, questions to be answered generally require classifying test results as indicative of mastery or proficiency of performance. Therefore, a key issue in determining alignment is the degree to which assessment results lead to correct classification of test scores and, by extension, correct interpretations of student performance relative to learning objectives and standards.

It is relatively straightforward to evaluate whether or not an assessment is effective for evaluating mastery of specific and narrowly-defined facts or skills. However, it quickly becomes more complicated when one hopes to use assessment to reflect a student’s mastery of a broad skill domain such as Reading, Language Arts, or Mathematics. In these cases, there are simply too many subskills and too many application contexts possible to assess all possible combinations and types of skills. In these circumstances it is necessary to select a sample of items from the broad domain in an attempt to estimate the student’s likely overall capacity. This requires that the items selected are representative of skills across the domain and include a sufficient number of items that the assessment score will reflect both mastery of skills tested and predicted mastery of domain skills not tested.

To demonstrate alignment here it is necessary to apply deductive reasoning. That is, we need to establish a hypothesis for what outcomes we would see if the assessment is aligned and then assess to confirm or disconfirm that hypothesis. This is usually done by comparing scores on the new assessment with scores on an established measure that is accepted as a “gold standard.” Thus, to evaluate any assessment for alignment to state standards, one would evaluate the relationship or consistency between scores achieved on the new measure and scores on the state test (the gold standard measure).

Score Comparison Methods

Statistical evaluation of the relationship between two sets of data is typically done with one of two main methods: (a) correlation, or (b) “Receiver Operating Characteristic” (ROC) Curve.

- Correlations. The correlation method includes rank ordering the students’ scores on the two tests and comparing how well these scores “match up” in order. This method is widely used and statistically sound but can over- or underestimate the test similarities because the two assessments might not measure skills in the same ways.

- ROC curve. This procedure compares the classifications made using the two assessments. That is, if a student is classified as “proficient” based on scores from one measure, is he or she similarly classified using the score from the other measure? ROC curve analyses produce a variety of statistics, including “sensitivity,” “specificity,” and “area under the curve” (AUC). The AUC statistic indicates the overall strength of the classification consistency between the two measures, and the sensitivity and specificity statistics provide information regarding the frequency of two types of classification errors. One type of error in this case would be for the assessment to classify as proficient someone who is not actually proficient on the state test, and the other is to classify someone as not proficient when he or she actually achieves a proficient score on the state test.
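Both comparison methods can be sketched with a small, invented data set. The scores and cut points below are hypothetical and are not FAST™ or state test values. The sketch treats “proficient on the state test” as the positive class, which is a convention chosen here for illustration; screening studies often treat “at risk” as the positive class instead.

```python
from statistics import mean

def ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    out = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            out[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return out

def pearson(x, y):
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)

def spearman(x, y):
    """Rank-order correlation: Pearson correlation of the two sets of ranks."""
    return pearson(ranks(x), ranks(y))

def sensitivity_specificity(scores, proficient, cut):
    """Predict 'proficient' when score >= cut, then compare with the state result."""
    predicted = [s >= cut for s in scores]
    tp = sum(p and a for p, a in zip(predicted, proficient))
    tn = sum((not p) and (not a) for p, a in zip(predicted, proficient))
    return tp / sum(proficient), tn / (len(proficient) - sum(proficient))

def auc(scores, proficient):
    """Chance that a proficient student outscores a non-proficient one (ties count half)."""
    pos = [s for s, a in zip(scores, proficient) if a]
    neg = [s for s, a in zip(scores, proficient) if not a]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Hypothetical scores for eight students on a new measure and on the state test.
new_measure = [12, 18, 25, 38, 36, 40, 44, 52]
state_test = [300, 320, 340, 335, 360, 365, 380, 400]
proficient = [s >= 350 for s in state_test]  # assumed state cut score of 350

print("Spearman rho:", round(spearman(new_measure, state_test), 3))
print("Sensitivity, specificity:", sensitivity_specificity(new_measure, proficient, cut=30))
print("AUC:", auc(new_measure, proficient))
```

In this invented sample, one non-proficient student scores above the cut on the new measure, so specificity falls below 1 even though the rank-order correlation is high. That is the kind of difference between the two methods that the descriptions above point to.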

Formative – Summative Continuum

One additional issue to consider when evaluating the alignment of a test to standards is the point in the school year when the assessment is administered relative to the point when the standard is expected to be mastered. This issue is often referenced by classifying an assessment routine as occupying a particular position on a continuum from “formative” to “summative.”

- Formative assessments are those that are administered prior to or during learning for the purpose of understanding a student’s current skills relative to the objective or standard. These formative assessments often focus on subskills necessary to master in an incremental fashion as one builds toward the highest level of skill represented by the standard.

- Summative assessments are evaluations of the student’s mastery or accomplishment of a standard at a point in time when it is expected that all students should demonstrate the skills reflected in the standard.

- Benchmark assessments are those whose timing of use falls at the midpoint of this continuum from formative to summative assessment. These assessments are typically used with larger groups of students to track the performance of the group in progressing toward standards. These are given two or more times during the school year and provide information to educators about whether or not the instruction provided is accomplishing the task of moving the students toward mastery of the standards.

When considering alignment of formative or benchmark assessments to standards, it is important to recognize that in order to assess progress toward end-point outcomes, items reflecting the full range of skills need to be included in the assessment. This can lead to confusion or anxiety for educators who observe that some of the skills being presented on the test may not have yet been taught. So, for assessments that are used for formative or interim/benchmark purposes, alignment to the standards will require that tests include items that are likely to be taught and mastered early in the instructional sequence as well as items that reflect the highest levels of mastery for that standard. The observation that students in the middle of the school year are encountering items that reflect end-of-year skills does not indicate a failure in alignment to the standard; rather, it demonstrates clear alignment with the standard and indicates the student’s current relative mastery of all the skills to be learned.

In this way, the alignment of assessment with standards differs from commonly held beliefs that assessments should only cover content that has been previously instructed. If your purpose for assessment is to track student progress toward a common learning target, scores can only improve as you approach the target, if the full range of skills is assessed. In this scenario, cut scores or target scores are generally established to reflect expected performance at different points in the school year. Thus, students are not expected to achieve the highest scores early in the year and the fact that there are items they are unable to get correct is expected. Assessing the mastery of individual subskills necessary for eventually meeting the standard as they are taught is typically referred to as mastery assessment and is frequently accomplished through unit tests and quizzes.
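The idea of cut scores that rise across the school year can be illustrated with a minimal sketch. The seasonal values below are hypothetical and are not published FAST™ benchmarks; the point is only that the same full-range test is scored against a different target at each administration.

```python
# Hypothetical seasonal cut scores on one full-range test (not published benchmarks).
BENCHMARKS = {"fall": 20, "winter": 35, "spring": 50}

def on_track(score, season):
    """A student is on track if the score meets the cut score for that season."""
    return score >= BENCHMARKS[season]

# A winter score of 38 is below the spring target of 50, yet the student is on track,
# because end-of-year performance is not expected in the middle of the year.
print(on_track(38, "winter"))  # True
print(on_track(38, "spring"))  # False
```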

Considering all of these perspectives in determining the alignment of an assessment to state or the CCSS is fairly complex because one must consider multiple issues to draw an accurate conclusion. However, this task can be broken down by considering first each of the elements separately and then evaluating the results in total to make a determination regarding the sufficiency of alignment for any measure being considered.

Alignment of Content

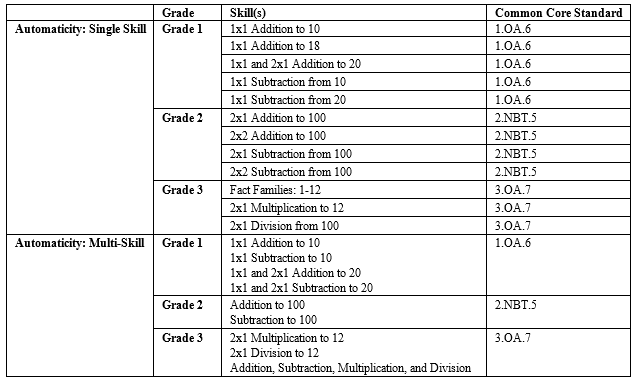

First, one would look at the content of the measure to ensure that the items included are reflective of the skills or a subset of key skills representative of the standard. Test publishers generally provide a table of examples to show alignment of test items with the content of the standards or classify tests and subtests according to the standard or standards covered. In the case of FAST™, both procedures for demonstrating test item content alignment with the CCSS were used. Detailed discussions of the alignment of test item content for each test are provided in the FAST™ Knowledge Base. Here is the FAST™ item alignment for CBM Math Automaticity and Math Process.

FAST™ item alignment for CBM Math Automaticity and Math Process

FAST™ Classification Accuracy

Evaluating FAST™ assessments for alignment with standards requires recognition that the FAST™ assessments can be used to address multiple questions: they can guide screening decisions, inform decisions regarding progress resulting from intervention, and provide information about overall program effects. For each of these application categories, demonstrating alignment requires the deductive reasoning approach and consideration of the statistical strength of relationships between FAST™ assessments and measures considered to reflect the “true” performance outcome for tested students. In the realm of standards alignment, the performance outcome objective is generally described as demonstration of “proficiency” on the established standards as measured by the state test.

Extensive evaluation of FAST™ assessments has been conducted comparing results with several state tests (GA, MA, MN, and WI) as well as with the Measures of Academic Progress (MAP) for students in grades K-10 (FastBridge Learning, 2015). These analyses provide evidence that FAST™ reading measures meet or exceed standards for classification accuracy at the “convincing evidence” level (62%) or “partially convincing evidence” level (32%) for an overall outcome that included 95% of the individual comparisons across grades and states. In addition, correlation analysis demonstrated that a majority of the validity coefficients meet the recommended level of correlation at or above .70. Similarly robust outcomes were observed when assessing math measures, although the researchers did not provide the specific percentages of results falling in each strength-of-evidence category.

Additional details and specific results of these analyses can be found here:

Summary

While educators are working hard to ensure that students meet identified state standards, considerations of how an assessment aligns to standards have become critically important. Unfortunately, understanding alignment of assessment tools to state standards has been complicated by controversy surrounding the adoption of CCSS as a uniform benchmark for student learning expectations. This has resulted in some continued variability in specific standards set in a handful of states that have not chosen to adopt the CCSS.

Educators recently have appropriately been expecting that test developers demonstrate how assessments are aligned with CCSS (or similar state standards) so that they can determine whether students are on-track for, or have met state standards. In this context, many test developers including those at FAST™ have incorporated procedures to ensure and demonstrate test alignment with CCSS. This has provided some assurance to educators in states using the CCSS that performance on these assessments can be useful for understanding student skill development relative to CCSS.

However, for educators in states that have not adopted CCSS, this has produced some anxiety because some perceive that assessments aligned to CCSS will not be useful in their states with their students. As explained, states that have not adopted CCSS generally appear to have adopted very similar or equivalent standards. For this reason, assessments shown to align with the CCSS are likely to predict student performance on state-specific standards. At this point, all states have adopted or set standards that align with College and Career Readiness, and these standards appear to be substantially similar. Where differences exist, the evidence suggests that the CCSS have set the bar for rigor higher than the standards implemented by individual states. Thus, using tests like those in the FAST™ system that have demonstrated alignment with CCSS, educators can be confident that students who have met FAST™ benchmarks will be highly likely to meet state benchmarks as well. It is important to remember that assessments capable of both predicting performance on state tests and tracking progress toward achievement of state standards must include items across the entire range of skills necessary for demonstrating proficiency in the standards. Thus, teachers should not be disturbed to observe, during the instructional year, that students are presented with test items of greater complexity than what has been taught. This does not indicate that a test is not aligned with the standards, but rather that the standards are the endpoint goal and instruction is incrementally presented to move students from low levels of skill to full proficiency.

References

Fordham Institute. (2010). The state of state standards-and the common core-in 2010. Washington, DC.

FastBridge Learning. (2015). Formative Assessment System for Teachers: Abbreviated Technical Manual for Iowa. Version 2.0. Minneapolis, MN.

National Governors Association Center for Best Practices and Council of Chief State School Officers. (2017). Common Core State Standards. Retrieved from: http://www.corestandards.org.

Peterson, P., Barrow, S., & Gift, T. (2016). After common core, states set rigorous standards. Education Next. Summer 2016, 9-15.