Test Norms: What Are They and Why Do They Matter?

Written By: John Bielinski, Ph.D. and Rachel Brown, Ph.D., NCSP

The central purpose of a score on any classroom assessment is to convey information about the performance of the student. Parents, educators and students want to know whether the score represents strong performance or is cause for concern.

But, to evaluate a score, we need a frame of reference. Where do educators find this basis for comparison? We have your answers.

In this post, we review exactly how test norms are developed and how they can assist teachers with instructional decision making.

Table of Contents

- Test Norms and Percentiles

- The Reference Group

- Sample Size and Demographic Matching

- Relationship Between Percentiles and Ability

- FAQs: Common Educator Questions About Test Norms and Benchmarks

- FAQs: Test Norms and Benchmarks Within FastBridge

- Summary

Test Norms and Percentiles

Whether we realize it or not, our tendency is to characterize all measurable traits normatively. This natural tendency is one of the reasons why normative scores are so useful for describing academic performance.

A norm can be thought of as the characterization of the typicality of a measured attribute in a specific group or population.

Here we will limit measured attributes to test scores. The measurement of performance begins as a raw score — such as the total number correct. Then, the score needs to be translated into a scale that indexes what is typical. An especially convenient and readily understood scale is the percentile rank.

A percentile rank expresses a score’s standing as the percentage of the group or population scoring at or below that score. For example, if a score of 100 is at the 70th national percentile, 70% of the national population scored at or below 100.
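The at-or-below definition above can be sketched in a few lines of Python. This is a minimal illustration, not FastBridge's procedure: the function name `percentile_rank`, the ten-score group, and the choice to clip results to the 1 to 99 range are assumptions, and published norms may use other conventions (for example, counting only half of the tied scores).

```python
from bisect import bisect_right


def percentile_rank(score: float, group_scores: list[float]) -> int:
    """Percentage of the group scoring at or below `score`, clipped to 1-99."""
    ordered = sorted(group_scores)
    at_or_below = bisect_right(ordered, score)  # number of scores <= score
    pct = round(100 * at_or_below / len(ordered))
    # Reported percentile ranks conventionally run from 1 to 99.
    return min(99, max(1, pct))


# Hypothetical group of ten scores, for illustration only.
scores = [62, 71, 80, 88, 95, 100, 100, 104, 112, 125]
print(percentile_rank(100, scores))  # 7 of 10 scores are <= 100, so 70
```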

Percentiles generally range from one to 99, with the average or typical performance extending from about the 25th to the 75th percentile. Scores below the 25th are below average and scores above the 75th are above average.

Although percentiles are familiar and are frequently used in educational settings, we rarely consider the development and underlying properties of percentiles.

We will briefly examine three aspects of percentiles that contribute to their quality and interpretation, to provide a basis for understanding why national norms in FastBridge were updated and how the update compares to prior norms.

What Are Test Norms?

The term “norms” is short for normative scores. Normative scores are ones collected from large numbers of students with diverse backgrounds for the purpose of showing “normal” performance on a specific assessment.

Normal performance refers to the scores typically observed on an assessment among students in different grades. For example, students in lower grades are not expected to know as much as students in higher grades.

If students in all grade levels completed the exact same test, younger students would be expected to obtain lower scores than older students on the test. Such a score distribution would be considered “normal” in relation to student grade levels.

Test norms represent the typical or “normal” scores of students at different grades or learning levels. In addition to scores being different for younger and older students, they can also vary among students in the same grade because of differences in prior learning and general ability.

Importantly, test norms can only be developed for tests that are standardized. Standardized tests have specific directions that are used in the same way every time the test is given. Standardization matters because test scores can only be compared when the test is identical for all students who take it, including both the items and the testing instructions.

Comparing scores from tests with different items and directions is not helpful because the students did not complete the same tasks; score differences are probably due to the different questions on the tests. Standardized tests allow score comparisons because the students all answered the same questions under the same conditions.

How Are Test Norms Developed?

In order to understand the differences in students’ scores within and between grade levels, test developers write and try out test questions many times before the final test is complete.

Once the test is complete, the developers then give the test to what is called a “normative sample.” This sample includes a selection of students from all of the grades and locations where the final test will be used. The sample is designed to allow collection of scores from a smaller number of students than the entire group that will eventually take the test.

But the sample needs to be representative of all the grade levels and backgrounds of students who will later take the test. This is important because if the normative sample includes only students from a certain grade level or part of the country, the scores will not reflect students from other grade levels and backgrounds.

In order to make sure that the normative sample is representative, a certain number of students from each grade as well as from applicable geographic regions are selected. For example, in gathering a normative sample for a state assessment, a certain number of students from each grade level in each county or school district could be selected.

In addition to selecting students based on grade and location, normative samples need to account for other student background features. For this reason, students with disabilities, students who are learning English, and students from different socioeconomic backgrounds need to be included.

One of the ways that test publishers decide how many students to recruit for a normative sample is to use data from the U.S. Census. The U.S. government conducts a survey of all the people in the country every 10 years.

This census shows how many people of each background live in each state. Such data help test publishers estimate the backgrounds of the students who attend schools in different regions.
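As a rough illustration of how census percentages could translate into recruitment targets, the sketch below splits a desired sample size across groups in proportion to population shares. The group names and shares are hypothetical, and the largest-remainder rounding is our own choice, not a documented publisher method.

```python
import math


def recruitment_quotas(total: int, shares: dict[str, float]) -> dict[str, int]:
    """Split `total` recruits across groups in proportion to `shares`.

    Uses largest-remainder rounding so the whole-number quotas sum to `total`.
    """
    share_sum = sum(shares.values())
    raw = {g: total * s / share_sum for g, s in shares.items()}
    quotas = {g: math.floor(v) for g, v in raw.items()}
    leftover = total - sum(quotas.values())
    # Hand out the remaining seats to the groups with the largest fractional parts.
    by_remainder = sorted(raw, key=lambda g: raw[g] - quotas[g], reverse=True)
    for g in by_remainder[:leftover]:
        quotas[g] += 1
    return quotas


# Hypothetical population shares for four groups (illustration only).
shares = {"Group A": 0.16, "Group B": 0.21, "Group C": 0.39, "Group D": 0.24}
print(recruitment_quotas(1000, shares))
```

Rounding each group independently could leave the sample a few students short or over; the largest-remainder step keeps the total exact.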

After identifying the student backgrounds the sample needs to represent, the test publisher recruits students and has them complete the assessment according to the standardized rules. Once all of the sample participants have completed the assessment, the scores are organized so that they can be analyzed.

One of the major ways the scores are organized is to put them into sets by grade level and then rank order them, that is, list them from lowest to highest. The rank orders within each grade can then be converted to percentile ranks.

Percentiles provide a way for teachers to know which scores are below, at, or above expectations for each grade level. Each percentile rank indicates the percentage of students in the normative sample who scored at or below a given score. Percentile ranks range from 1 to 99 and can be used to understand which scores are typical, or average, for students in each grade.
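The grouping, rank ordering, and conversion steps above might look like this in Python. It is a sketch under simple assumptions: `build_norm_tables` is a hypothetical helper, the records are invented, and each score's percentile rank is the rounded percentage of same-grade scores at or below it.

```python
from collections import defaultdict


def build_norm_tables(records):
    """Map each grade to {raw score: percentile rank} from (grade, score) records."""
    by_grade = defaultdict(list)
    for grade, score in records:
        by_grade[grade].append(score)  # put scores into sets by grade

    tables = {}
    for grade, grade_scores in by_grade.items():
        grade_scores.sort()  # rank order from lowest to highest
        n = len(grade_scores)
        table = {}
        for i, s in enumerate(grade_scores):
            # i + 1 scores are at or below s; for tied scores the last
            # (highest) count overwrites earlier ones.
            table[s] = min(99, max(1, round(100 * (i + 1) / n)))
        tables[grade] = table
    return tables


# Hypothetical normative records: (grade, raw score).
sample = [(2, 40), (2, 55), (2, 55), (2, 70),
          (3, 60), (3, 75), (3, 90), (3, 90)]
norms = build_norm_tables(sample)
print(norms[2])  # {40: 25, 55: 75, 70: 99}
print(norms[3])  # {60: 25, 75: 50, 90: 99}
```

Because each grade gets its own table, a raw score of 70 sits at the top of this Grade 2 sample, while a slightly higher 75 is only at the 50th percentile in Grade 3.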

How Do Test Norms Assist Teachers?

Scores collected as part of a normative sample that represents all of the types of students who will later take a certain test offer a way for teachers to know which scores are typical and which ones are not typical.

To determine if a score is typical, the teacher compares it to the available test norms. For example, if a teacher wanted to know if a score of 52 on a standardized and normed test is in the average range, he or she can consult the norms for that test.

If the test has a range of scores from 0 through 100, and the average score is 50, then a score of 52 would be considered normal.

But if the test has a range of scores from 0 through 200 and the average score is 100, 52 would be considered a low score.

The teacher could then look up the percentile ranking for a score of 52. The percentile ranking would indicate what percentage of students in the normative sample scored at or below 52, and therefore what percentage scored above it.

Such information would help the teacher to know how far below average the score is. The teacher could also identify how many points the student needs to gain in order to reach the average range of scores.
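The lookup described above can be sketched with a small norm table. The table values, the name `lookup`, and the use of the 25th percentile as the lower edge of the average range (from the earlier description of percentiles) are assumptions for illustration; real norm tables are far more detailed.

```python
from bisect import bisect_right

# Hypothetical norm table for one test and grade: (percentile, score at that percentile).
# Invented values for illustration; not real FastBridge norms.
NORMS = [(5, 40), (10, 55), (25, 80), (50, 100), (75, 120), (90, 140), (95, 150)]
AVERAGE_FLOOR = dict(NORMS)[25]  # lower edge of the average range (25th percentile)


def lookup(score: float) -> tuple[int, float]:
    """Return (highest tabled percentile the score reaches, points to the 25th percentile)."""
    cut_scores = [s for _, s in NORMS]
    i = bisect_right(cut_scores, score)  # number of tabled scores at or below `score`
    percentile = NORMS[i - 1][0] if i > 0 else 1  # below the lowest tabled score
    points_needed = max(0, AVERAGE_FLOOR - score)
    return percentile, points_needed


print(lookup(52))   # (5, 28): about the 5th percentile, 28 points short of average
print(lookup(100))  # (50, 0): already in the average range
```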

In any class there will always be a range of student abilities and scores. If teachers can learn how each student’s score compares to the test norms, they can develop instruction that matches each student’s learning needs.

It is important to note that test norms can be used to identify both lower-achieving and higher-achieving students. In some classes, there might be many students who are well above the average score. In such cases, the teacher needs to develop lessons that help students advance to even higher levels.

Unlike benchmarks, test norms provide information related to all students’ current skills. Benchmarks show which students have met a single specific goal but norms indicate all students’ standing compared to the distribution of scores from a normative sample.

The Reference Group

As stated above, test norms are necessarily interpreted in relation to a reference group or population. Yet we rarely consider the composition of the reference group beyond general terms such as national or local.

But the composition of the norm group, that is, its demographic characteristics, is essential. The more precisely the group can be defined, the more meaningful the interpretation of normative scores such as percentiles.

The figure below shows examples of reference groups based on geographic range and demographic composition on a continuum from broad to narrow. National test norms are typically broad with respect to geographic range and demographic composition.

However, there are instances in which local or national test norms may be based on a subset of the student population, such as English learners (EL). What is important is that the norm group is well-defined along these dimensions.

The technical documentation for the new FastBridge national norms provides a detailed description of the demographic composition of the norm groups and how they compare to the entire U.S. school population in each grade.

By closely matching the percentages of the U.S. school population by gender, race/ethnicity and socio-economic status (SES) in each grade for each assessment individually, we can accurately generalize performance on FastBridge assessments relative to the national school population.

Another positive consequence of carefully matching demographic percentages is that the norms are stable across grades — they are less prone to possible biasing effects of varying composition, especially relative to SES across grades. The prior FastBridge national test norms also had broad geographic and demographic composition but were not as tightly defined relative to demographic percentages.

Sample Size and Demographic Matching

Another important characteristic is the size of the norm group. Test norms become more stable as sample size increases.

What this means is that if we were to compare percentiles derived from many random samples from a population, the score associated with each percentile will vary somewhat across samples. That variability tends to be smaller near the middle of the percentile range (25th – 75th percentile) compared to the tails of the range (1st – 10th and 90th – 99th percentile). This effect can be easily seen through differences in local test norms for a given grade across years.
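A quick simulation shows why the tails vary more than the middle. This sketch assumes normally distributed scores with a hypothetical mean of 100 and SD of 15 and uses a simple nearest-rank percentile; it is not the analysis behind FastBridge's norms.

```python
import random
import statistics


def percentile_spread(percentiles, n, reps=500, mean=100.0, sd=15.0, seed=1):
    """SD across `reps` random samples of size `n` of the score at each percentile.

    Scores are drawn from a normal distribution (an illustrative assumption).
    """
    rng = random.Random(seed)
    estimates = {p: [] for p in percentiles}
    for _ in range(reps):
        sample = sorted(rng.gauss(mean, sd) for _ in range(n))
        for p in percentiles:
            k = max(1, -(-p * n // 100))  # nearest-rank: ceil(p% of n)
            estimates[p].append(sample[k - 1])
    return {p: statistics.stdev(v) for p, v in estimates.items()}


spread = percentile_spread([5, 50, 95], n=500)
for p, s in spread.items():
    print(f"percentile {p}: SD of estimated score across samples = {s:.2f}")
```

Rerunning with a larger `n` shrinks every spread, which is the stabilizing effect of sample size described above; the 5th and 95th percentiles stay noisier than the 50th.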

Importantly, sample size does not ensure accuracy. Accuracy refers to how closely the test norms match the true population values that would be obtained if they were based on the scores from all U.S. students.

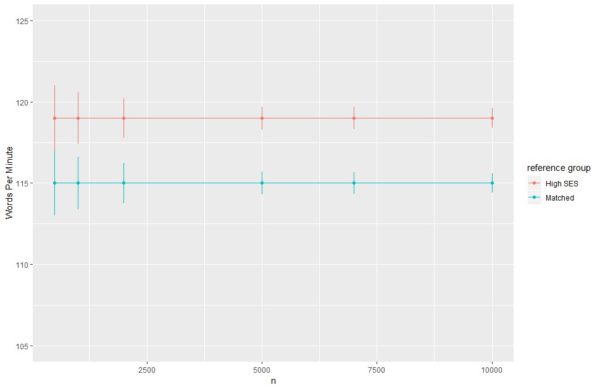

To demonstrate the effects of demographic match and sample size on accuracy and stability, we simulated score distributions that closely match the words per minute (wpm) oral reading rates in Grade 2 on CBMreading under two conditions: sample size (500, 1,000, 2,000, 5,000, 7,000, 10,000) and SES (matched to the U.S. and higher than the U.S.).

The results are displayed in the figure below. The horizontal lines represent the wpm score associated with the 40th national percentile (some risk cut score). The orange line is the High SES sample and the teal line is the Matched SES sample.

Because the teal line is matched to the population, it also represents the true score. For the High SES group, we simulated an effect size bias equivalent to one-tenth of a standard deviation, which is a very small effect.

The vertical lines represent the amount of variation in the score associated with the 40th percentile across samples. The longer the vertical line, the more variation and less stability.

Note that the vertical lines are very short at every sample size. At a sample size of 5,000, the instability is equivalent to only one word per minute! This is a negligible effect.

Additionally, the worst-case scenario for the Matched SES group, which is the top or bottom of the vertical line at each point, is always better than the best-case scenario in the High SES group.

Two conclusions can be drawn from this simulation. First, a norm sample of 5,000 is sufficient to generate highly stable results. Second, demographic matching can matter more than sample size, even when samples are as small as 500.
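The logic of the simulation above can be sketched in Python. The Grade 2 mean and SD, the number of replications, the sample sizes, and the normal distribution are hypothetical choices, so the printed numbers are not the results shown in the figure; only the pattern matters: a small systematic shift does not shrink as samples grow, while sampling noise does.

```python
import random
import statistics

MEAN_WPM, SD_WPM = 90.0, 40.0  # hypothetical Grade 2 distribution, not real norms
SHIFT = 0.1 * SD_WPM           # High SES bias: one-tenth of an SD
TRUE_P40 = statistics.NormalDist(MEAN_WPM, SD_WPM).inv_cdf(0.40)


def p40_estimates(n, shift, reps=100, seed=7):
    """Nearest-rank 40th-percentile score in each of `reps` samples of size `n`."""
    rng = random.Random(seed)
    k = -(-40 * n // 100)  # ceil(40% of n)
    out = []
    for _ in range(reps):
        sample = sorted(rng.gauss(MEAN_WPM + shift, SD_WPM) for _ in range(n))
        out.append(sample[k - 1])
    return out


results = {}
for n in (500, 1000, 2000, 5000):
    # Same seed for both conditions, so they differ only by the SES shift.
    matched = p40_estimates(n, 0.0)
    high_ses = p40_estimates(n, SHIFT)
    results[n] = (statistics.mean(matched), statistics.mean(high_ses))
    print(f"n={n:>5}: true {TRUE_P40:5.1f} | matched {results[n][0]:5.1f} "
          f"(SD {statistics.stdev(matched):.2f}) | high SES {results[n][1]:5.1f} "
          f"(SD {statistics.stdev(high_ses):.2f})")
```

Reusing the seed is a deliberate design choice (common random numbers): both conditions see identical sampling noise, so any gap between them is the SES shift alone.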

Relationship Between Percentiles and Ability

A third property of percentiles that is important to consider when comparing performance across students or comparing the prior FastBridge norms to the new demographically matched national test norms is the nonlinear relationship between percentiles and ability.

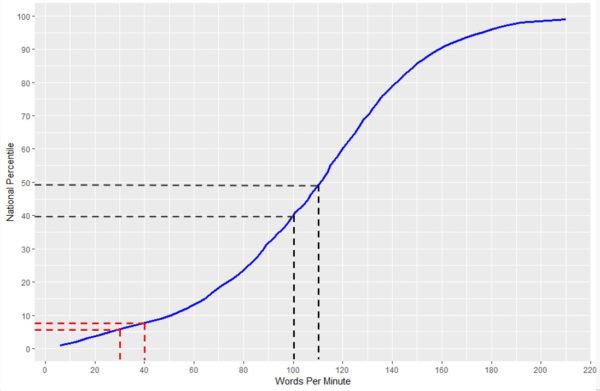

The blue curve in the figure below shows the relationship between percentiles (vertical axis) and CBMreading wpm scores (horizontal axis). The curve is S-shaped, not linear. This means that for a given difference in ability (e.g., 10 wpm), the difference in percentile points varies depending on where in the percentile range it occurs.

The dashed lines represent a 10-point difference in wpm. For oral reading rates, a 10-point difference is nearly trivial; in fact, it corresponds to the amount of random error present in each student’s score. Thus, a 10-point difference may be no more than random error.

A score of 100 wpm corresponds to the 39th percentile and a score of 110 wpm corresponds to the 49th percentile, a 10-percentile-point difference! By contrast, the difference between scores of 30 wpm and 40 wpm represents just a two-point percentile difference.
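The S-shaped relationship follows from how scores pile up near the middle of a distribution. Assuming, purely for illustration, that wpm scores are roughly normal with a mean of 111 and an SD of 40 (hypothetical values, not FastBridge norms), Python's `statistics.NormalDist` shows the same 10-point gain producing very different percentile changes.

```python
from statistics import NormalDist

# Hypothetical normal approximation of a wpm distribution (illustration only).
WPM = NormalDist(mu=111, sigma=40)


def percentile_of(score: float) -> float:
    """Percentage of the (hypothetical) distribution at or below `score`."""
    return 100 * WPM.cdf(score)


gaps = {}
for low, high in [(30, 40), (100, 110), (180, 190)]:
    gaps[(low, high)] = percentile_of(high) - percentile_of(low)
    print(f"{low} -> {high} wpm: percentile {percentile_of(low):.0f} -> "
          f"{percentile_of(high):.0f} ({gaps[(low, high)]:.1f} points)")
```

Near the middle, a 10 wpm gain moves a student past many peers; in either tail, few students occupy that stretch of the scale, so the same gain barely changes the percentile.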

When comparing the new demographically matched FastBridge norms to the prior national test norms, the differences in ability levels for a given percentile are generally small. For CBMreading, Grades 2 – 6, the score differences across the some-risk segment are all less than 10 wpm, while in the high-risk segment the score differences range from seven to 14 wpm.

A similar pattern occurs with other FastBridge assessments. Although these differences are real and will generally result in a smaller percentage of students flagged as at-risk, they should be interpreted in light of the ability difference and not the number of percentile points.

As demonstrated here, interpretation of the size of percentile differences depends on the point along the ability continuum the difference occurs.

FAQs: Common Educator Questions About Test Norms and Benchmarks

What Is the Difference Between a Test Norm and a Benchmark?

This is a common question and can be confusing because many FastBridge screening reports display both test norms and benchmarks to support your decision-making.

A normative comparison allows you to compare a student’s score to that of her peers. When driving, for instance, the flow of traffic is a normative comparison: depending on the location and time of day, the flow of traffic changes with the other drivers on the road. FastBridge has both local and nationally representative norms, allowing you to compare students’ scores to both local peers and students nationwide.

Benchmarks, on the other hand, support the comparison of a student’s score to a predetermined criterion. The speed limit is an example of a benchmark. It doesn’t change based on who is driving on a given road; it provides a constant comparison to determine if your speed is safe in the given setting.

Why Do My Test Norms and Benchmarks Tell Me Different Things?

Let’s return to the driving example. If we compare my speed of 60 miles an hour to a speed limit of 55, we may decide that I was driving too fast. However, if all the other drivers on the road are passing me at speeds above 60, we may decide I was driving too slowly.

The comparison we make may lead to different decisions. A police officer may be more attuned to the criterion, the speed limit, while a passenger in the car may pay more attention to the flow of traffic, or norm.

Similarly, when comparing screening data to norms or benchmarks, you may see some different patterns. For instance, if most students in your school are high performing, some students may have scores that look low when compared to local test norms.

Those same students’ scores may meet low risk benchmarks and be within the average normative range nationally, though.

By contrast, this data from FastBridge displays a situation in which the local population has more students with scores at high risk: some students may appear to be in the average range when compared to local test norms but be at risk of not meeting standards in reading or math when compared to benchmarks.

While this can be confusing, these different types of information are essential when deciding who may need additional intervention to be successful.

Which Does FastBridge Recommend: Test Norms or Benchmarks?

It depends on the question you’re asking and the decision you’re making.

Test norms are best used when decisions are being made that require you to compare a student’s score to that of other students.

When you want to determine if a student is at risk of not meeting standards, benchmarks should be used.

We recommend using benchmarks to determine which students need additional support, then using norms to decide if that support needs to be supplemental or provided through Tier 1 core instruction.

FAQs: Test Norms and Benchmarks Within FastBridge

How Were the Normative Ranges Set?


The normative ranges were set to show where most students’ scores fall and align with typical resource allocation in schools. Most schools do not have the resources to provide supplemental intervention to more than 20% – 30% of students.

FastBridge norms make it clear which students fall in those ranges. Additionally, if a student’s score falls between the 30th and 85th percentile ranks, the score is consistent with where the majority of students are scoring. That range includes students who are likely receiving core instruction alone.

Remember, norms cannot be used to indicate risk of poor reading or math outcomes.

So, students whose CBMreading score is at the 35th percentile rank may be at risk in reading, even though they will likely not receive additional support outside of core instruction.

That’s why FastBridge recommends that benchmarks are used in conjunction with test norms to make decisions about how to meet student needs. For example, a core intervention may be appropriate in cases where a large number of students score below benchmark but are within the average range compared to local norms.
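A sketch of the benchmark-plus-norms check described above. The 30th to 85th percentile range comes from the text; treating both endpoints as inclusive, the range labels, the function names, and the roster are assumptions.

```python
def normative_range(percentile: int) -> str:
    """Place a percentile rank relative to the 30th-85th range (endpoints inclusive)."""
    if percentile < 30:
        return "below 30th"
    if percentile <= 85:
        return "30th to 85th"
    return "above 85th"


def share_below_benchmark_but_average(students) -> float:
    """students: (local percentile, met_benchmark) pairs; a hypothetical data shape."""
    flagged = [p for p, met in students
               if not met and normative_range(p) == "30th to 85th"]
    return len(flagged) / len(students)


# Hypothetical class: (local percentile rank, met the benchmark?)
roster = [(12, False), (35, False), (41, False), (48, True),
          (55, False), (63, True), (78, True), (91, True)]
print(share_below_benchmark_but_average(roster))  # 3 of 8 students -> 0.375
```

A high share would point toward strengthening core instruction, as described above; how high counts as "large" is a local decision the text does not fix.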

Can We Set Custom Benchmarks in FastBridge?

FastBridge allows District Managers to set custom benchmarks. If your school has done an analysis to identify the scores associated with specific outcomes on your state tests, you can enter those custom benchmarks into the system.

The Knowledge Base has an article that provides instructions to set custom benchmarks. When completed, these will be displayed on all FastBridge reports.

Why Are Local Test Norms Missing From Some of My Reports?

Since test norms compare students’ scores to those of other students, if only a portion of a school or district is assessed, those comparisons could be misleading.

For instance, if we only screen students who we have concerns about, a student’s score may look like it’s in the middle of the group, when in reality, the student is at-risk of not meeting expectations in reading or math.

Because of this, FastBridge will only calculate and display local test norms when at least 70% of the students in a group have taken the screening assessment. If you have fewer than 70% of students screened with a specific assessment, we recommend using national test norms or benchmarks to identify student risk.
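The 70% rule reduces to a simple check. `local_norms_available` is a hypothetical name, not a FastBridge API; the sketch uses integer arithmetic so that a group screened at exactly 70% is not misjudged by floating-point rounding.

```python
def local_norms_available(screened: int, enrolled: int, min_percent: int = 70) -> bool:
    """True when at least `min_percent` of the group took the screening assessment."""
    if enrolled <= 0:
        return False
    # Integer comparison avoids floating-point surprises right at the threshold.
    return screened * 100 >= min_percent * enrolled


print(local_norms_available(84, 120))  # 70% screened -> True
print(local_norms_available(80, 120))  # about 67% screened -> False: use national norms or benchmarks
```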

Why Are CBMreading Benchmarks Higher Than Those for Other Published Assessments of Oral Reading Fluency?

Benchmarks are used to determine risk of not meeting standards. Similar to a speed limit changing depending on the setting (e.g., highway versus school zone), benchmarks will differ depending on the conditions of the measure used.

In CBMreading specifically, one reason FastBridge passages have extremely high predictive validity is their highly structured nature. The FastBridge CBMreading passages include decodable words as well as high-frequency English words so that they are as easy to read as possible.

They were developed specifically to be able to screen and monitor student progress with both predictive validity and high sensitivity to growth. As with any assessment, you should compare scores on FastBridge assessments with only FastBridge norms and benchmarks.

How Are Growth Rates for Goals and the Group Growth Report Set?

The growth rates used in the FastBridge Progress Monitoring groups are based on research documenting typical improvement by students who participate in progress monitoring.

The growth rates in the Group Growth Report are based on the scores corresponding to the FastBridge benchmarks; however, they can be customized when accessing that report.

How Are Rates of Improvement (ROI) Related to Norms and Benchmarks?

The rates of improvement (ROI), or growth rates, you’ll find in various parts of FastBridge are derived from our product’s normative data. The growth rates are developed based on the typical performance of students in the national test norms at every fifth percentile ranking.
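The text does not spell out the calculation, but one plausible reading is that the growth rate at a given percentile is the change in the score at that percentile between two screening periods, divided by the weeks between them. The sketch below assumes exactly that, with invented fall and spring values and a hypothetical 32-week interval; it is not FastBridge's documented procedure.

```python
# Hypothetical national-norm scores at a few fifth-percentile points (invented values).
FALL = {20: 45, 25: 52, 30: 58}
SPRING = {20: 75, 25: 84, 30: 91}
WEEKS = 32  # hypothetical number of weeks between the fall and spring screenings


def roi_by_percentile(fall: dict[int, float], spring: dict[int, float],
                      weeks: float) -> dict[int, float]:
    """Assumed ROI: score gain at each percentile divided by weeks elapsed."""
    return {p: (spring[p] - fall[p]) / weeks for p in fall if p in spring}


for p, roi in roi_by_percentile(FALL, SPRING, WEEKS).items():
    print(f"percentile {p}: {roi:.2f} points per week")
```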

You can find these growth rates in the normative tables in the Training and Resources tab within FastBridge. This section of the system also contains information about interpreting FastBridge norms and benchmarks.

Ultimately, while the two ideas have different applications, we know schools use test norms and benchmarks together and separately to aid in decision-making. Because of that, you can see both on many of our reports.

Summary

Test “norms” — short for normative scores — are scores from standardized tests given to representative samples of students who will later take the same test.

Norms provide a way for teachers to know what scores are typical (or average) for students in a given grade. Norms also show the range of scores observed on the test at each grade level and the percentile ranks matched to each score.

Unlike benchmarks, norms provide information about all students’ relative performance on a test, whether at the low, middle or high levels. Teachers can use norms to identify each student’s current proficiency as compared to other students, as well as to identify which students need remedial, typical, or accelerated instruction.