7 Methods for Measuring Student Growth

Back in the days of NCLB, schools were given credit for the percent of students achieving the state’s “proficient” level, regardless of how far students progressed to proficiency.

However, in the past few years, accountability systems have moved beyond this proficiency-only view of achievement by recognizing that some students have much farther to go to reach proficiency, even though proficiency remains the minimum target for everyone. This has led policymakers to look for ways to measure academic growth through growth models.

What is Student Growth?

To put it simply, student growth is how much academic progress a student has made between two points in time. For example, this could be from the start of the year to the end of the year, or from Year 1 to Year 2.

But measuring student growth is not a simple matter. Many different approaches and models exist, each with its own proper implementation and use cases. Whichever one you choose, it depends on several factors to be valid and reliable.

First, you need to have good assessments. That means quality items, a sufficient number of items, alignment to standards, an appropriate range of DOK (Depth of Knowledge) levels, and sound item statistics such as p-values, point-biserial correlations and so forth.
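As a rough illustration of two of the item statistics mentioned above, the sketch below computes an item p-value (the proportion of students answering correctly) and a point-biserial correlation between one item and the total score. It assumes items are scored 0/1 and that the total score includes the item itself (an uncorrected correlation); the function names and the sample data are hypothetical.

```python
from statistics import mean, pstdev


def item_p_value(item_scores):
    """Proportion of students who answered the item correctly (0/1 scoring)."""
    return mean(item_scores)


def point_biserial(item_scores, total_scores):
    """Correlation between a 0/1 item and the total test score.

    Returns None when the statistic is undefined (everyone or no one
    answered correctly, or all total scores are identical).
    """
    p = mean(item_scores)
    sd_total = pstdev(total_scores)  # population standard deviation
    if p in (0, 1) or sd_total == 0:
        return None
    mean_correct = mean(t for i, t in zip(item_scores, total_scores) if i == 1)
    mean_incorrect = mean(t for i, t in zip(item_scores, total_scores) if i == 0)
    return (mean_correct - mean_incorrect) / sd_total * (p * (1 - p)) ** 0.5


# Hypothetical data: four students, one item, and their total test scores.
item = [1, 1, 0, 0]
totals = [4, 3, 2, 1]
print(item_p_value(item))
print(point_biserial(item, totals))
```

A higher point-biserial suggests that students who do well on the test overall also tend to answer this item correctly.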

Additionally, there needs to be alignment between the assessment, the curriculum, and the methods of instruction. Students also need to be motivated and engaged, so that we have some idea of how reliable the assessment data are.

With that being said, here are seven different methodologies and approaches we can take when it comes to measuring student growth.

Models of Measuring Student Growth

Gain Score Model

The gain score model measures year-to-year change by subtracting the prior-year (initial) score from the current-year (final) score. The gains for a teacher’s students are averaged and compared to the overall average gain for other teachers. It’s quite easy to compute and can be used with local assessments. We’ve also had state accountability assessments use the gain score model, and it’s probably the most basic model available. The problem is that it doesn’t account for initial achievement levels; it’s just a basic calculation of the change in score for each student.
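The calculation described above can be sketched in a few lines. This is a minimal illustration, not an operational model: the record layout and function name are hypothetical, and each teacher is compared against the average gain across all students in the data, which is one possible reading of "overall average gain."

```python
from collections import defaultdict
from statistics import mean


def teacher_gain_scores(records):
    """records: iterable of (teacher, prior_score, current_score) tuples."""
    gains_by_teacher = defaultdict(list)
    for teacher, prior, current in records:
        gains_by_teacher[teacher].append(current - prior)  # gain = final - initial

    # Assumption: the comparison point is the mean gain over all students.
    overall = mean(g for gains in gains_by_teacher.values() for g in gains)

    return {
        teacher: {"mean_gain": mean(gains), "vs_overall": mean(gains) - overall}
        for teacher, gains in gains_by_teacher.items()
    }


# Hypothetical scores for two teachers.
records = [
    ("A", 200, 220), ("A", 210, 220),
    ("B", 200, 205), ("B", 190, 195),
]
print(teacher_gain_scores(records))
```

Note that nothing in this calculation looks at where a student started, which is the limitation described above.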

Value-Added Models (VAM)

Value-added modeling is frequently used for teacher evaluation. It measures a teacher’s contribution in a given year by comparing the current test scores of their students to the scores of those same students in previous school years. In this manner, value-added modeling seeks to isolate the contribution that each teacher provides in a given year, which can then be compared to the performance measures of other teachers.

VAMs are considered to be fairer than the gain score model because they account for potentially confounding context variables such as past performance, student status or family income status. They’re generally not used with local assessments, but are intended for state accountability assessments. The calculations behind these models can be somewhat complex, and they require quite a bit of data.

VAM – Covariate Adjusted Approach

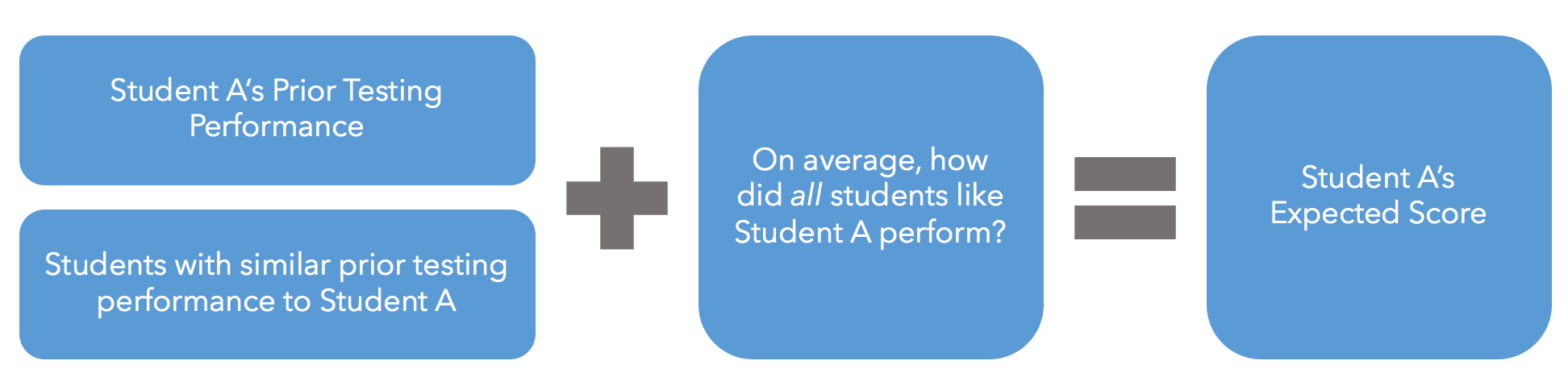

In this model, growth is measured by comparing students’ expected scores with their actual observed scores. A student’s expected (or predicted) score is obtained by looking at prior test scores over multiple years and other student characteristics, identifying students with similar testing performance in those prior years, and then estimating how that group of students would be expected to score (on average) on the test.

The growth measure is related to the number of scale score points a group of students scored above or below their expected score, which takes into account their prior testing performance.

Note that one of the downsides of this approach is that it requires several years of “matched” data for accuracy. In other words, you can’t just use any random group of students for prior testing performance; you need data linked to the same students over several years to have an accurate value-added model.
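To make the expected-versus-observed idea concrete, here is a heavily simplified sketch. It predicts each student's current score from a single prior score using ordinary least squares, then averages the residuals (observed minus expected) by teacher. Real value-added models use multiple years of matched data and additional covariates; the single predictor, the record layout and the function names here are all assumptions made for illustration.

```python
from collections import defaultdict
from statistics import mean


def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("prior scores must not all be identical")
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def covariate_adjusted_growth(records):
    """records: list of (teacher, prior_score, current_score) tuples.

    Returns the average number of scale score points each teacher's
    students scored above (+) or below (-) their expected score.
    """
    slope, intercept = fit_line([r[1] for r in records], [r[2] for r in records])
    residuals = defaultdict(list)
    for teacher, prior, current in records:
        expected = intercept + slope * prior
        residuals[teacher].append(current - expected)
    return {teacher: mean(vals) for teacher, vals in residuals.items()}


# Hypothetical matched records for two teachers.
records = [
    ("A", 100, 112), ("A", 200, 212),
    ("B", 100, 108), ("B", 200, 208),
]
print(covariate_adjusted_growth(records))
```

In this toy data set, teacher A's students land about two points above expectation and teacher B's about two points below, even though both groups started from the same prior scores.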

Student Growth Percentile Model (SGP)

A student growth percentile (SGP) describes a student’s growth compared to other students with similar prior test scores. Percentiles are used to rank a student’s growth compared to others, which is a more straightforward and easier-to-interpret method.

The student growth percentile allows us to fairly compare students who start at different levels with similar students. This is called “banded growth.” This model can be used with local assessments, such as a pre-test/post-test model.

Unlike in VAMs, each student receives an individual percentile score. In this model, students will always be categorized as “winners” and “losers” because growth is normalized around a percentile rank. Therefore, some students will always be high percentile, and some will be low percentile.
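The banding idea can be illustrated with a small sketch. It groups students into bands by prior score and then computes each student's percentile rank on the current score within their own band. This is a simplification for illustration only: operational SGP implementations are typically more sophisticated (for example, using quantile regression), and the band width, record layout and function name below are assumptions.

```python
from collections import defaultdict


def banded_growth_percentiles(records, band_width=50):
    """records: iterable of (student_id, prior_score, current_score) tuples.

    Returns {student_id: percentile rank (0-100) within the prior-score band}.
    """
    bands = defaultdict(list)
    for student, prior, current in records:
        bands[prior // band_width].append((student, current))

    percentiles = {}
    for members in bands.values():
        scores = [score for _, score in members]
        for student, current in members:
            below = sum(s < current for s in scores)
            ties = sum(s == current for s in scores)  # includes the student
            # Mid-rank percentile: peers below plus half of the ties.
            percentiles[student] = 100 * (below + 0.5 * ties) / len(scores)
    return percentiles


# Hypothetical data: four students starting low, two starting high.
records = [
    ("s1", 110, 150), ("s2", 120, 160), ("s3", 130, 170), ("s4", 140, 180),
    ("s5", 410, 450), ("s6", 420, 440),
]
print(banded_growth_percentiles(records))
```

Because ranks are computed within each band, every band has students at the high end and the low end of the percentile range, which reflects the "winners and losers" property described above.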

Effect Size Approach

Effect size is a way of quantifying the size of the difference between two groups. It is easy to calculate and understand, and it can be used with any outcome in education (or even in fields like medicine).

The goal of the effect size is to provide a measure of the “size of the effect,” or impact, of instruction rather than relying on statistical significance alone, which confounds effect size with sample size. Effect size scores are expressed in standard deviation units, like “Z-scores” of a normal distribution, and have the same possible range of scores. Effect size scores will typically range from -1.5 to +1.5. If a teacher receives an effect size of +1.0, their students grew 1 standard deviation of test score points more than those of the average teacher.
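One way to compute a teacher-level effect size along these lines is sketched below. It assumes the comparison point is the mean gain across all students and that the standardizer is the sample standard deviation of all student gains; other choices (such as a pooled standard deviation) are possible, and the function name and data are hypothetical.

```python
from statistics import mean, stdev


def teacher_effect_size(teacher_gains, all_gains):
    """Standardized difference between a teacher's mean gain and the overall mean gain.

    Assumption: the standardizer is the sample standard deviation of all gains.
    """
    return (mean(teacher_gains) - mean(all_gains)) / stdev(all_gains)


# Hypothetical gains for nine students; the last two belong to one teacher.
all_gains = [0, 0, 0, 0, 20, 20, 10, 20, 20]
teacher_gains = [20, 20]
print(teacher_effect_size(teacher_gains, all_gains))
```

With this data the overall mean gain is 10 points and the standard deviation is 10 points, so a teacher whose students averaged a 20-point gain sits one standard deviation above the average.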

Computer-Adaptive Approaches

These have become quite common and typically use a “vertical scaled score” to show growth within a single year or over multiple years on the same scale. The scale is not tied to a grade level, so it is similar to measuring a student’s height: as a student moves up the vertical scale, their academic skills are increasing accordingly.

They can also overcome the limitations of fixed-form assessments (i.e., a subset of items that can’t be adjusted). In other words, adaptive assessments will adapt downward or upward in difficulty based on a student’s performance.
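The up-and-down adjustment can be illustrated with a toy "staircase" rule: move one difficulty level up after a correct response and one level down after an incorrect one. Actual computer-adaptive tests generally select items using more sophisticated statistical models, so treat this only as a sketch of the basic idea; the difficulty range, step size and function names are assumptions.

```python
def next_difficulty(current, was_correct, step=1, lowest=1, highest=10):
    """Move difficulty up after a correct answer, down after an incorrect one."""
    proposed = current + step if was_correct else current - step
    return max(lowest, min(highest, proposed))  # stay within the item pool


def run_adaptive_sequence(start, responses, **kwargs):
    """Return the difficulty level presented at each step, including the start."""
    levels = [start]
    for was_correct in responses:
        levels.append(next_difficulty(levels[-1], was_correct, **kwargs))
    return levels


# Hypothetical response pattern: correct, correct, incorrect, correct.
print(run_adaptive_sequence(5, [True, True, False, True]))  # [5, 6, 7, 6, 7]
```

A fixed-form test, by contrast, would present the same items regardless of how the student responded.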

Progress Monitoring

Progress monitoring is different in that it typically uses criterion-referenced assessments, which are not normed; results are reported against criteria (e.g., at risk, on target). These assessments are efficient to administer, and the data can be displayed to show the “absolute discrepancy” between where the student is performing and the expected target or level. The data can also be graphed to measure a change in the rate of progress relative to expected growth over time.
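Both quantities can be computed directly from a series of progress-monitoring scores. The sketch below treats the absolute discrepancy as target minus observed score (a sign convention chosen here for illustration) and estimates the rate of progress as the least-squares slope of score against week. The function names and the sample data are hypothetical.

```python
from statistics import mean


def absolute_discrepancy(observed, target):
    """Points between the expected target and the observed score.

    Assumption: positive values mean the student is below target.
    """
    return target - observed


def rate_of_progress(weeks, scores):
    """Least-squares slope: average score change per week."""
    mw, ms = mean(weeks), mean(scores)
    numerator = sum((w - mw) * (s - ms) for w, s in zip(weeks, scores))
    denominator = sum((w - mw) ** 2 for w in weeks)
    return numerator / denominator


# Hypothetical weekly scores and an expected growth rate of 4 points per week.
weeks = [1, 2, 3, 4]
scores = [20, 23, 26, 29]
expected_rate = 4.0

print(absolute_discrepancy(scores[-1], target=40))
print(rate_of_progress(weeks, scores))
print(rate_of_progress(weeks, scores) < expected_rate)  # growing slower than expected?
```

Comparing the observed slope with the expected rate gives a simple check on whether the student is on pace to close the gap.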

*****

Illuminate Education is a provider of educational technology and services offering innovative data, assessment and student information solutions. Serving K-12 schools, our cloud-based software and services currently assist more than 1,600 school districts in promoting student achievement and success.


Exactly what data is used to determine an individual student’s growth within a school year?

From one school year to the next?

(And how do these scores factor in the learning / non learning during summer break that has nothing to do with schools?)

What specific “local assessments” are used?

Is “local assessment” data submitted by districts, or is this data mined by government / organizations to create scores?